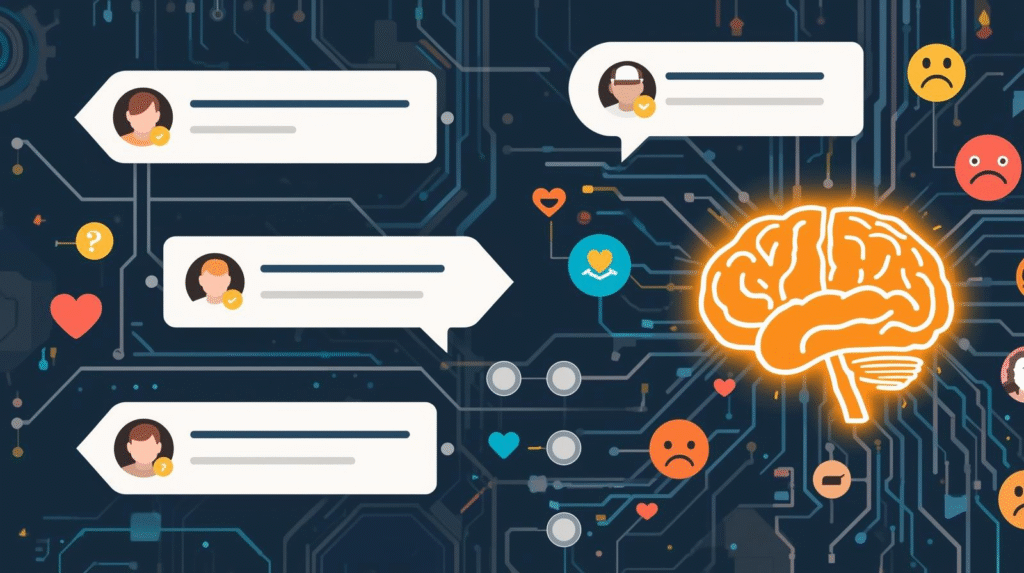

The year is 2025, and AI chatbots for mental health have gone from futuristic experiments to mainstream tools. They are everywhere — from therapy apps on your smartphone to corporate wellness programs and even university counseling services.

These AI therapy chatbots promise to provide emotional support, track moods, and guide users through stress, anxiety, and depression. For many people, they feel like a lifeline — available at any time of the day, free or cheap, and free of judgment.

But the rapid rise of these tools brings with it a serious debate: Are AI chatbots really helping mental health, or are they quietly causing more harm than good?

This blog will unpack that question in depth, examining their benefits, risks, ethical concerns, real-world applications, and future role in mental health.

What Are Mental Health Chatbots?

A mental health chatbot is a type of software powered by artificial intelligence, often using natural language processing, to simulate conversation with a human being.

These chatbots are designed to listen, respond, and provide techniques like guided meditation, mood tracking, or cognitive behavioral therapy (CBT)-based exercises. Popular examples include Woebot, Wysa, and Replika.
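To make the idea concrete, here is a deliberately tiny sketch of the "listen, respond, suggest a technique" loop described above. It is an illustration only: the `TECHNIQUES` table, the keywords, and the function names are all hypothetical, and it says nothing about how Woebot, Wysa, or Replika are actually built. Real products rely on far more capable language models than simple keyword matching.

```python
import re

# Hypothetical keyword table, invented for this example.
# Each entry maps a technique name to (trigger words, suggested reply).
TECHNIQUES = {
    "breathing": (
        ("anxious", "panic", "stressed"),
        "Let's try a slow breathing exercise: in for 4, hold for 4, out for 6.",
    ),
    "journaling": (
        ("overthinking", "thoughts", "ruminating"),
        "Would it help to write down what is on your mind for five minutes?",
    ),
    "mood_check": (
        ("sad", "down", "lonely"),
        "I'm sorry you're feeling this way. How would you rate your mood from 1 to 10?",
    ),
}

DEFAULT_REPLY = "Thank you for sharing. Can you tell me more about how you're feeling?"


def tokenize(message: str) -> set[str]:
    """Lowercase the message and split it into a set of simple word tokens."""
    return set(re.findall(r"[a-z']+", message.lower()))


def choose_technique(message: str) -> tuple[str, str]:
    """Return (technique name, reply) for the first technique whose keywords match."""
    words = tokenize(message)
    for name, (keywords, reply) in TECHNIQUES.items():
        if words & set(keywords):
            return name, reply
    # Nothing matched: fall back to an open-ended prompt.
    return "listen", DEFAULT_REPLY


if __name__ == "__main__":
    name, reply = choose_technique("I feel so stressed about exams")
    print(name, "->", reply)
```

Even this toy version shows the appeal (instant, tireless responses) and hints at the limits: the bot only "understands" what its rules or training data anticipate.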

The attraction lies in their simplicity: unlike a therapist, a chatbot never judges, never gets tired, and is always available at the tap of a screen.

Why Are AI Chatbots So Popular in 2025?

There are three main reasons why AI in mental health has become one of the fastest-growing trends:

- Accessibility Gap – The shortage of therapists worldwide has left millions without support. Chatbots step in to fill that gap.

- Affordable Care – Traditional therapy is expensive, while AI counseling chatbots are usually free or low-cost.

- Stigma-Free Privacy – Many people feel safer opening up to an AI than facing the fear of being judged by another person.

This combination of availability, affordability, and anonymity explains why chatbot-based mental health support has grown so quickly in 2025.

The Promises of AI Chatbots

Supporters argue that AI therapy apps deliver real value. They provide immediate comfort to someone experiencing stress or loneliness in the middle of the night. They can introduce practical exercises like breathing techniques, journaling prompts, or calming mindfulness sessions.

For workplaces and schools, they offer scalable solutions, reaching thousands of people without the cost of hiring an army of therapists. For individuals afraid of stigma, chatbots represent a safe, private space to express emotions.

In these ways, chatbots are helping millions to feel less isolated, and sometimes, just having a voice respond instantly is enough to make a person feel heard.

The Hidden Risks of AI Mental Health Chatbots

On the flip side, critics highlight the dangers of AI mental health chatbots. While they can simulate empathy, they cannot truly feel compassion. This difference matters, because the depth of understanding in therapy often depends on genuine human connection.

Worse, algorithms can misinterpret what a person is saying. Imagine a user expressing suicidal thoughts, and the bot responding with something generic or inappropriate — the consequences could be catastrophic.
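A small sketch can show how this kind of miss happens. The example below assumes a naive safety screen that checks messages against a fixed phrase list before the bot replies. The phrase list, function names, and return labels are hypothetical and chosen only for illustration; it is not a clinical tool and not how any named product works.

```python
# Hypothetical phrase list for a naive crisis screen (illustration only).
CRISIS_PHRASES = ("suicide", "kill myself", "end my life", "hurt myself")


def screen_for_crisis(message: str) -> bool:
    """Return True if the message contains any phrase from the fixed list."""
    text = message.lower()
    return any(phrase in text for phrase in CRISIS_PHRASES)


def respond(message: str) -> str:
    """Escalate when the screen fires; otherwise fall through to a generic reply."""
    if screen_for_crisis(message):
        # In a real system this path should hand off to a human or crisis service.
        return "escalate"
    return "generic"


if __name__ == "__main__":
    # Direct wording is caught by the phrase list.
    print(respond("I want to end my life"))
    # Indirect wording contains none of the listed phrases, so it is missed.
    print(respond("I don't see the point in going on anymore"))
```

The second message gets a generic reply because it never uses a listed phrase. More sophisticated models reduce this kind of false negative but do not eliminate it, which is why critics argue for human oversight in crisis situations.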

Another concern is overreliance. People may lean too heavily on chatbots, postponing or avoiding real therapy. That delay can worsen conditions like depression or anxiety.

Finally, privacy and ethics cannot be ignored. Sensitive data collected during conversations may be stored or misused by companies. Questions about accountability remain unresolved: if an AI counseling chatbot gives harmful advice, who takes responsibility?

Comparison: AI Chatbots vs. Human Therapists

| Feature | AI Chatbots | Human Therapists |
|---|---|---|
| Availability | 24/7, instant | Limited, appointment-based |
| Cost | Mostly free or low-cost | Expensive ($60–$200 per session) |
| Empathy | Simulated empathy | Genuine emotional understanding |
| Accuracy | Algorithm-driven | Clinically trained expertise |
| Privacy | Depends on company policy | Legally protected confidentiality |
| Long-Term Healing | Limited coping support | Deep, personalized therapy |

This table makes one thing clear: the relationship between AI chatbots and human therapists is not a competition but a balance. Chatbots may complement professionals, but they cannot replace them.

Can AI Replace Therapists?

The short answer is no.

While AI therapy chatbots can provide comfort, reminders, and quick coping strategies, they cannot offer the nuanced understanding and deep healing that human therapists provide. Therapy often involves unpacking trauma, exploring emotions, and building trust — elements that no algorithm can replicate.

At best, AI is a supportive supplement. It can guide people until they find professional care, but it should never be seen as a cure or replacement.

Ethical Concerns of AI in Mental Health

The rise of AI in mental health support raises urgent ethical issues.

First, data privacy: conversations with chatbots involve sensitive personal disclosures. If this data is misused or leaked, it could harm users deeply.

Second, bias and inclusivity: AI systems are trained on data sets that may not reflect all cultures, languages, or contexts. A chatbot trained on Western data may not understand or help someone from another cultural background.

Third, accountability: if a chatbot misguides a user during a crisis, who is responsible — the developer, the company, or the AI itself?

Without stronger regulations, these ethical questions make chatbots a risky tool for vulnerable individuals.

Real-Life Applications in 2025

Despite concerns, digital mental health tools are now embedded in many areas of life. Universities deploy them during exam seasons to reduce student stress. Companies include them in employee wellness packages. Hospitals use them for preliminary mental health screenings before assigning patients to professionals.

These applications show that AI chatbots are not just theoretical; they are actively shaping how people experience mental health care today. But their effectiveness remains limited to supportive functions rather than deep therapy.

The Future of AI in Mental Health

The most likely future is a hybrid model where AI and humans work together.

Chatbots will handle basic emotional support, early screening, and coping exercises, while therapists will focus on complex cases requiring empathy and long-term care.

This collaboration could bridge the mental health gap, giving millions more people access to at least some level of support while keeping human therapists at the center of serious care.

Key Takeaways

- AI chatbots for mental health are booming in 2025 due to accessibility and affordability.

- They provide quick support but come with risks like lack of empathy, privacy issues, and unsafe advice.

- Human therapists remain irreplaceable for deep healing and complex emotional needs.

- The best future is AI and human therapists working together rather than competing.

Conclusion

So, are AI chatbots helping or harming mental health in 2025? The answer lies in balance.

They are excellent at providing immediate, stigma-free support, but they are dangerous if treated as replacements for professional care. While they fill gaps in accessibility, they cannot replicate empathy, cultural understanding, or the human connection that real therapy offers.

The safest path forward is using them as assistive companions, while keeping humans at the heart of mental health care. Technology can guide, but healing still requires a human touch.

How do AI therapy chatbots work?

They use natural language processing (NLP) to understand user input and respond with therapeutic advice, coping strategies, or mental health resources.

Are AI therapy chatbots safe?

Yes, they are generally safe for basic mental health support, but they are not a replacement for professional therapy in serious cases.

Can AI therapy chatbots replace human therapists?

No, they complement human therapists by offering 24/7 support, but cannot fully replace personalized professional care.

What are the benefits of using AI therapy chatbots?

- 24/7 availability

- Affordable mental health support

- Immediate responses

- Privacy and anonymity

What are the risks or limitations?

- Limited understanding of complex emotions

- May not detect crisis situations

- Dependence without professional guidance