In 2025, AI therapy chatbots have become a mainstream option for mental health support across the globe. From busy professionals to students struggling with anxiety, these tools offer immediate access to therapeutic guidance. Platforms like Woebot, Wysa, and Replika have changed the way people manage mental health, providing support at any hour, in multiple languages, and at minimal or no cost.

However, while the benefits of AI in mental health are evident, there are growing concerns around AI therapy risks, privacy issues, and ethical considerations. This guide explores the current landscape, highlighting what users need to know to navigate this emerging field safely and effectively.

The Benefits of AI Therapy Chatbots

AI therapy chatbots offer several advantages that make them appealing to users worldwide.

First and foremost, accessibility is a significant factor. Many individuals struggle to find a qualified therapist in their area, and scheduling conflicts can prevent regular appointments. AI chatbots can provide immediate support, breaking down these barriers.

Secondly, these platforms offer anonymity, which encourages people to discuss sensitive mental health issues they might be reluctant to share with a human therapist. This can reduce stigma and increase engagement.

Thirdly, consistency is a key strength. Unlike human therapists who may vary in approach, AI chatbots deliver structured interventions reliably, ensuring users receive a consistent level of support.

Finally, scalability enables these tools to serve large populations simultaneously, which is crucial given the global shortage of mental health professionals.

While these benefits are compelling, it is important to recognize that AI therapy chatbots are not a replacement for human therapists, and users should be aware of their limitations.

Understanding the Risks of AI Therapy Chatbots

Despite their advantages, AI therapy chatbots carry inherent risks. These include limited emotional understanding, weak data privacy, over-reliance, and inaccurate advice.

One of the most critical issues is the lack of genuine human empathy. AI cannot fully understand nuanced emotions, and responses may occasionally feel robotic or dismissive. This limitation can affect the quality of support and the user’s overall experience.

Data privacy is another major concern. Users often share highly sensitive personal information, and without robust security protocols that data is exposed to unauthorized access or misuse.
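
One way a platform, or a cautious user, might limit exposure is to strip obvious identifiers from a message before it is stored or sent. The sketch below is only an illustration of that idea in Python. The `redact` function and its two patterns are hypothetical, not taken from any platform named in this article, and they cover only common email and phone formats.

```python
import re

# Hypothetical patterns for illustration only; they catch common formats, not all.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(message: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    message = EMAIL.sub("[email]", message)
    message = PHONE.sub("[phone]", message)
    return message

print(redact("Reach me at jane@example.com or 555-123-4567."))
```

Pattern-based redaction like this misses names, addresses, and context that can identify a person, so it reduces risk rather than removing it.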

Over-reliance is also a risk. Users may become dependent on AI chatbots, neglecting the need for human interaction and professional therapy. While these tools can supplement care, they should not replace licensed therapists.

Finally, AI systems may sometimes provide inaccurate or harmful advice, particularly when addressing complex mental health issues. The algorithms behind chatbots rely on patterns in training data, which may not always account for individual circumstances.

Ethical Considerations in AI Therapy

The integration of AI in mental health raises important ethical questions.

Transparency is critical. Users must be fully informed that they are interacting with an AI system, not a human therapist. Similarly, the platforms must clearly communicate how personal data is collected, stored, and used.

Accountability is another key issue. Determining responsibility when AI provides incorrect guidance is complex. Should the developers, platform, or user be held accountable? Clear guidelines are still evolving in this area.

Bias in AI is a growing concern. AI mental health tools can inherit biases from their training data, which may result in skewed recommendations or culturally insensitive advice. Addressing these biases is vital to ensure safe and fair user experiences.

Informed consent must be prioritized. Users should understand both the potential benefits and limitations of AI therapy chatbots before engaging with them.

Regulatory Landscape in 2025

As AI therapy chatbots become increasingly prevalent, governments worldwide are implementing regulations to safeguard users.

In the United States, Illinois passed legislation that prohibits AI chatbots from performing professional therapy or making therapeutic decisions. This law aims to prevent potential harm caused by unregulated AI interventions.

Australia has raised concerns about the ethical management of AI within public services, particularly the deployment of chatbots like QChat, emphasizing the need for responsible oversight.

In the United Kingdom, authorities highlighted incidents of AI misuse, such as chatbots generating harmful content, prompting calls for stricter regulation and ethical guidelines.

Globally, these regulatory developments reflect a growing recognition that AI therapy regulation is essential to protect users and maintain trust in these platforms.

Guidelines for Safe Use

To use AI therapy chatbots responsibly, consider the following:

- Verify the platform's credibility, including its reputation and the developer's credentials, before use.

- Avoid sharing highly sensitive or confidential information.

- Treat AI chatbots as a supplement to professional care, not a replacement.

- Keep track of the advice you receive and cross-check it with a professional.

- Stay informed about evolving regulations and privacy policies in your region.

Potential Impact on Global Mental Health

The global adoption of AI therapy chatbots has the potential to increase access to mental health support in underserved regions. By overcoming geographic and financial barriers, these tools can offer early interventions, potentially reducing the severity of mental health crises.

However, to maximize benefits, it is crucial to integrate AI solutions with existing healthcare systems, provide transparency, and maintain ethical standards. AI cannot yet replicate human intuition, cultural understanding, or nuanced emotional support.

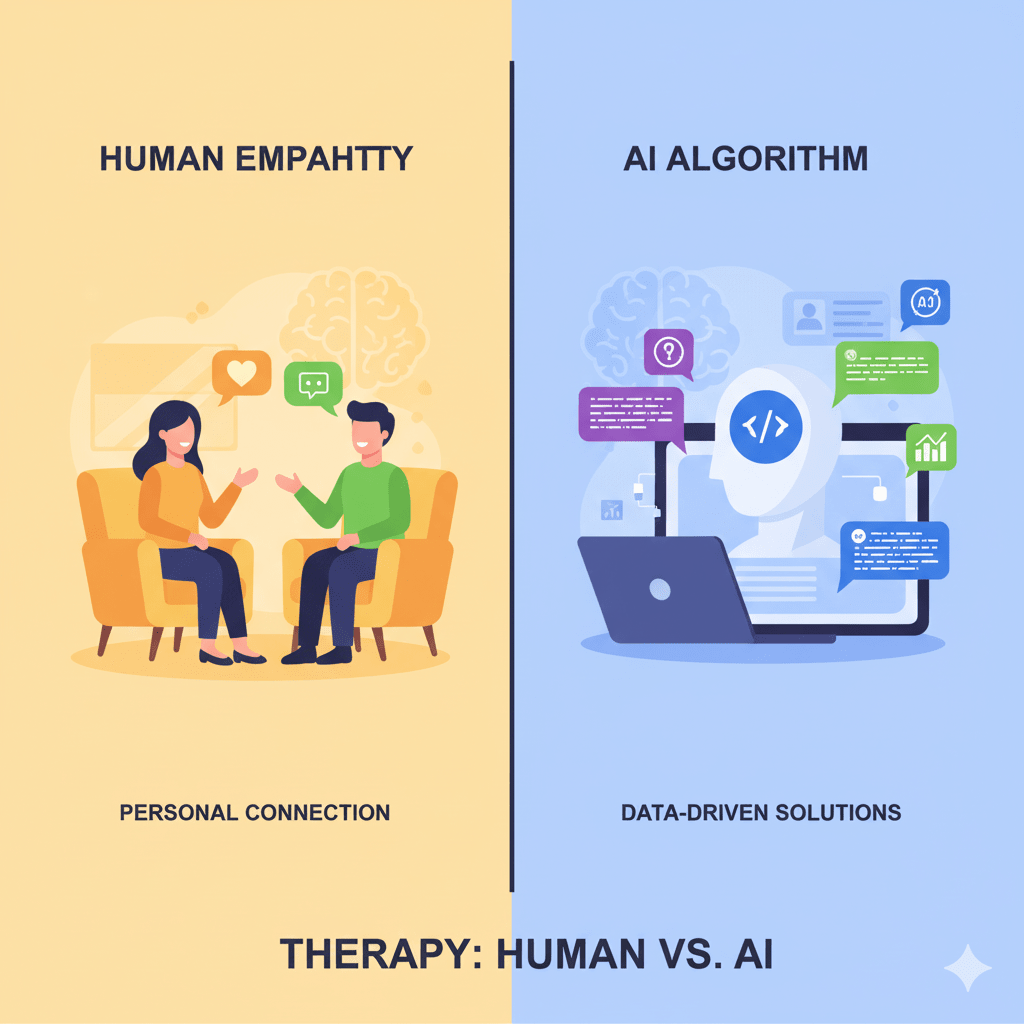

Comparison with Traditional Therapy

While AI therapy chatbots provide convenient, scalable support, they differ from traditional therapy in several ways:

- Human interaction: Traditional therapy offers nuanced emotional support that AI cannot replicate.

- Personalization: Human therapists tailor interventions to the individual, while AI relies on algorithmic patterns.

- Crisis management: Humans can recognize urgent situations and escalate appropriately, whereas AI might fail in emergencies.

- Availability: AI offers 24/7 access, whereas human availability is limited.

This comparison highlights that AI chatbots are best used in conjunction with professional care, particularly for complex or severe mental health issues.
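
The crisis management point can be made concrete with a small sketch of how a chatbot might hand a conversation to a human. This is a simplified illustration under stated assumptions: the phrase list, `needs_escalation`, and `route` are hypothetical names invented here, and keyword matching is used only because it is easy to read, not because it is adequate for real crisis detection.

```python
# Hypothetical phrase list for illustration; a real system would need clinically
# reviewed criteria and would not rely on keyword matching alone.
CRISIS_PHRASES = ("suicide", "kill myself", "end my life", "hurt myself")

def needs_escalation(message: str) -> bool:
    """Return True if the message contains any of the listed crisis phrases."""
    text = message.lower()
    return any(phrase in text for phrase in CRISIS_PHRASES)

def route(message: str) -> str:
    """Decide whether to hand off to a human or continue the automated chat."""
    if needs_escalation(message):
        return "escalate_to_human"
    return "continue_chat"
```

A check like this fails whenever someone describes distress in words that are not on the list, which is one reason automated tools should not be the only safeguard in an emergency.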

Future of AI in Mental Health

Looking ahead, AI is poised to play an increasingly significant role in mental health care. Emerging trends include:

- Enhanced natural language understanding for more empathetic interactions.

- Integration with wearable devices to monitor real-time stress, sleep, and physiological responses.

- Increased focus on ethical AI, including bias mitigation and accountability frameworks.

- Collaboration with human therapists to create hybrid care models combining AI efficiency with human judgment.

These developments aim to improve accessibility and outcomes while addressing existing concerns about AI therapy risks.

Conclusion

AI therapy chatbots represent a transformative shift in mental health care. They offer accessibility, consistency, and scalability, addressing some of the key challenges in global mental health support.

However, users must remain cautious, recognizing the limitations of AI, the importance of privacy, and the need for professional oversight. By understanding the risks, regulations, and ethical considerations, individuals can make informed decisions, leveraging AI safely to enhance their mental health journey.

Remember: AI is a tool, not a replacement for human empathy and professional expertise. Staying informed, using platforms responsibly, and seeking human guidance when necessary are essential for safe and effective mental health support in 2025.

Frequently Asked Questions

What are the risks of AI chatbots in therapy?

Risks include lack of human empathy, potential bias, inaccurate advice, and privacy concerns. Over-reliance can also be harmful.

How are AI therapy chatbots regulated globally?

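Approaches differ by country. For example, in the United States, Illinois prohibits AI chatbots from performing professional therapy or making therapeutic decisions, while authorities in the UK and Australia have raised concerns about harmful content and the ethical oversight of AI.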

Who is liable if AI therapy gives wrong advice?

Liability depends on the platform and local regulations. Clear accountability guidelines are still evolving in most regions.

Can AI chatbots replace human therapists?

No. AI chatbots are tools to supplement therapy, not replace human therapists, who provide empathy, personalization, and crisis intervention.